Randomness and unguided evolution in a clockwork universe

Consider how we instinctively treat randomness as a property inherent in a process, whereas it is instead a relative concept: what appears totally random in one experimental setup might well turn out to be predictable in another (or for someone else).

For example, contrary to common belief, tossing a die is an indeterministic or a deterministic process depending on how we observe it. We tend to think of it as indeterministic because it is a highly complex sequence of classical physical processes that is almost impossible to follow, measure, and predict. In principle, however, we could predict the outcome: given all the physical parameters, the initial and boundary conditions of the die, and a powerful numerical tool that calculates the dynamics of the event, one could ideally predict which face it lands on. Tossing a die is a macroscopic dynamical process described by the laws of classical physics, and it could be predicted if we had complete knowledge of everything needed to calculate the outcome. Coin and dice tossing are deterministic processes because, if they were repeated with exactly the same initial and boundary conditions, the outcome would be the same.

Whether a random event turns out to be predictable (that is, not random) therefore depends only on our knowledge. Randomness should not be confused with an intrinsic property of an object or a system, like the mass, the electric charge, or the spin of a particle.

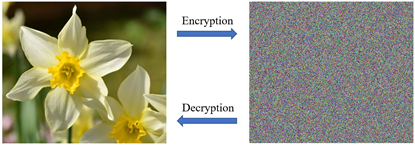

Another example along the same lines is encryption technology, which is created for precisely one purpose: to transform sensitive data into a stream that has been optimized to appear patternless, that is, random. It transforms a stream of bits, according to a complicated informational protocol, into a complex sequence that to all appearances is purely coincidental. Yet what the encrypted stream presents as mere noise, without any regularity, let alone meaningful structure (a stream of apparently coincidental data dominated by chance, without meaning or purpose), suddenly makes sense once it is decoded with a key or password and reveals the presence of something meaningful.
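To make the point about encryption concrete, here is a toy sketch. It is not secure cryptography and not how real ciphers such as AES work. A message is XORed with a keystream derived deterministically from a secret key via Python's seeded `random.Random`. The scrambled bytes look like noise, yet the same key restores the text exactly. The helper names `keystream` and `xor_cipher` and the key string are hypothetical, chosen only for illustration.

```python
import random


def keystream(key: str, length: int) -> bytes:
    """Derive `length` pseudo-random bytes deterministically from `key`.

    Toy only: Python's Mersenne Twister is NOT cryptographically secure.
    """
    rng = random.Random(key)  # same key -> same byte sequence, every time
    return bytes(rng.randrange(256) for _ in range(length))


def xor_cipher(data: bytes, key: str) -> bytes:
    """XOR each byte with the keystream; applying it twice restores the input."""
    stream = keystream(key, len(data))
    return bytes(b ^ k for b, k in zip(data, stream))


message = "Shall I compare thee to a summer's day?".encode("utf-8")
scrambled = xor_cipher(message, key="sonnet-18")

print(scrambled.hex())                                  # looks patternless
print(xor_cipher(scrambled, key="sonnet-18").decode())  # meaningful again
```

Without the key, the hex output offers no obvious regularity. With it, the "randomness" disappears, because the keystream was never random for anyone who knows the key.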

Therefore, in a certain sense, randomness is in the eyes of the beholder: it depends on what one knows and is not something inherent in a process or in the ‘world out there’.

However, all too frequently, even top scientists and philosophers forget all this when expressing philosophical views that continue to connect randomness with purposelessness, aimlessness, or the lack of a goal or of intelligent design.

What further fuels the confusion is the distinction between merely apparently random phenomena, so-called ‘pseudo-random processes’, and ‘truly’ random processes, or between ‘deterministic’ and ‘non-deterministic’ processes, the latter meaning that for the same input the system nevertheless produces different outputs.

Let’s first consider things in the domain of classical physics.

Is this distinction warranted? Can we come up with a single example of a ‘truly’ random process?

The distinction is only intuitive and colloquial, not scientific. For example, you will hear that coin tossing is a ‘truly’ random and non-deterministic process. But we have already seen that it is easy to show, with a thought experiment, that this is not the case. Whether or not something is predictable depends on what you know and how you look at it.

Likewise, so-called ‘random number generators’ on a computer are usually just very complicated deterministic programs that create sequences of pseudo-random numbers, that is, numbers generated by a deterministic algorithm. The algorithm is designed to be so complicated that its output appears to be a random sequence. When we talk about the random number generator of an ordinary computer, we mean a program that ‘hides’ its variables. If we knew all these hidden variables and the algorithm behind them, we could predict the outcomes, at least in principle.
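The "hidden variables" of a pseudo-random generator can be illustrated with a minimal linear congruential generator (LCG), x_{n+1} = (a·x_n + c) mod m. This is a sketch under stated assumptions. The constants are the widely quoted Numerical Recipes values, picked only for illustration. Python's own `random` module uses the more elaborate Mersenne Twister, but the principle is the same. The class `LCG` and the helper `roll_die` are hypothetical names. An "observer" that copies the generator's internal state predicts every subsequent virtual die roll.

```python
class LCG:
    """Minimal linear congruential generator: x_{n+1} = (a*x_n + c) mod m.

    Constants are the Numerical Recipes values, an illustrative choice,
    not what any particular computer or library actually uses.
    """

    def __init__(self, seed: int, a: int = 1664525, c: int = 1013904223,
                 m: int = 2**32):
        self.state = seed % m  # the 'hidden variable'
        self.a, self.c, self.m = a, c, m

    def next_float(self) -> float:
        """Advance the hidden state and return a number in [0, 1)."""
        self.state = (self.a * self.state + self.c) % self.m
        return self.state / self.m


def roll_die(gen: LCG) -> int:
    """Map the generator's output onto a virtual die face 1..6."""
    return 1 + int(gen.next_float() * 6)


player = LCG(seed=2024)
rolls = [roll_die(player) for _ in range(10)]  # looks like a fair die

# An observer who knows the algorithm and copies the current hidden state...
observer = LCG(seed=0)
observer.state = player.state
predicted = [roll_die(observer) for _ in range(10)]

# ...foresees every future roll exactly.
actual = [roll_die(player) for _ in range(10)]
print(rolls)
print(predicted == actual)  # True
```

To the player, the rolls look like chance. To the observer who holds the hidden state, they are fully determined.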

This is the conception of randomness that underlies all of classical statistical mechanics, and it is historically rooted in Galilean and Newtonian physics. It is also what the French mathematician and philosopher Pierre-Simon Laplace recognized in 1814 when he posited a purely deterministic and, at least in principle, completely predictable universe. Laplace framed this in a thought experiment featuring what is nowadays known as ‘Laplace’s demon’, which is still the (undeclared and implicit) assumption of modern materialism. Laplace’s demon is widely known for illustrating a strictly causally deterministic universe in a reductionist form that leaves almost no room for teleological speculation. Laplace wrote:

“We may regard the present state of the universe as the effect of its past and the cause of its future. An intellect which at a certain moment would know all forces that set nature in motion, and all positions of all items of which Nature is composed, if this intellect were also vast enough to submit these data to analysis, it would embrace in a single formula the movements of the greatest bodies of the universe and those of the tiniest atom; for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes.”

Such a classical deterministic ontology could, at best, admit a deistic conception in which final causes, if any, could be traced back only to the beginning of the universe, when such an ‘intellect’ must have fixed with utmost precision the initial conditions of every particle to obtain a specific desired dynamical evolution “of the greatest bodies of the universe and those of the tiniest atom” over the billions of years to come. Such a deity would have imparted the initial ‘kick’ to the universe and then stood back without further intervention, leaving it to its predetermined destiny.

While this kind of almost completely deterministic theology looks like a desperate retreat to save a theistic worldview, a Laplacian worldview that also denies the existence of such an ‘intellect’ ends up in an entirely deterministic cosmology that looks equally, if not more, extreme. It implicitly assumes that all events in the universe are not only determined by an inescapable chain of causes and effects reaching into the future but were also predetermined in the most distant past state of the universe. Our present physical state, in all its minutest details, was already inscribed in the initial conditions of the universe at the time of the Big Bang. This means that, for example, all of Shakespeare’s sonnets, Mozart’s music, Newton’s Principia, every painting by Van Gogh, Einstein’s genius, and all of human literature, science, and art were already present, in every detail, in a hot, opaque, and messy primordial plasma 13.8 billion years ago. Such an inescapable Laplacian causality inevitably leads to a universe where everything must have been present in potential form from the beginning, like a huge book whose every page was written in advance in a superdense, superhot, structureless clump of hydrogen and helium with only traces of lithium and no heavier elements, let alone stars or planets. If this was indeed the case, the obvious question is: How did that fantastic story get there? Who or what encoded that epic adventure into a primeval soup?

While some might be willing to embrace a deistic or a purely Laplacian cosmology, both positions are the ultimate logical consequences of two opposing hypotheses pushed to their extremes, and both can hardly avoid provoking some reluctance. The former is forced to posit a purely mechanistic universe with a rather indifferent (indeed, demonic) deity watching the pains and strivings of its creatures as if in a movie in which it plays no role. The latter is forced to posit the outcome of an evolutionary process as already present at its very beginning: a quite perplexing philosophical move, considering that it was supposed to eliminate any teleological innuendo!

Nevertheless, pondering these strictly deterministic ontologies is pedagogically useful for clarifying the semantics of words like ‘randomness’, ‘accident’, and ‘chance’. From the perspective of Laplace’s demon, these concepts have no meaning. Nothing was, is, or ever will be random or happen by chance, because everything is predictable in all its details. These are only human labels to which we resort when we are ignorant of the parameters characterizing a phenomenon and therefore cannot “embrace in a single formula.” In the context of classical determinism, the phenomena that appear to us as completely random are in fact completely determined and predictable; whether they look random depends only on the observer and has nothing to do with the phenomenon itself. The difference exists only in our minds and has no ontological counterpart.

That is why invoking statistical arguments about random mutations, or any other accidental events that cause genetic variation and thereby steer an undirected Darwinian evolutionary process, is an empty inference. By doing so, one erroneously conflates a measure of randomness with an empirical test for the absence of directedness, or claims to prove, by seemingly logical inference, an absence of final causes that had in fact already been unconsciously posited ab initio as an axiom.

So much for the so-called “unguided Darwinian evolution”.

While working on my physics degree, I wanted to understand deeply what randomness exactly is, out of both scientific and philosophical curiosity. I once came across a research paper on coin tossing that studied in detail all the relevant factors: wind, air resistance, the angle and point of impact of the toss, and the landing dynamics of the coin when it hits the ground. If we know and control all the initial conditions, it is in principle possible to predict the outcome of the toss with great certainty, which places it in the deterministic domain. I think the easy way out is a statistical treatment, which I believe many good physicists have adopted; but that does not reveal the inherent nature of randomness. Is it purely mathematical, or could it also be related to how we perceive information? There is a philosophical question here about whether our understanding of randomness is biased by human perception; but that argument is circular, since it implies that all knowledge is human-centric and nothing is objective. Funnily enough, this line of thinking led me to explore epistemological anarchism, through Paul Feyerabend, the infamous “enemy” of science, and Lakatos, a line of thought that few people take seriously.