The binding problem and the emergence of meaning

[Excerpt from Vol. I of “Spirit calls Nature”]

Let us ponder an interesting feature of our conscious life: binding. Binding remains a deep, unexplained mystery of conscious perception that has not, to this day, found a definitive resolution. What philosophers of mind call the ‘binding problem’ relates to the fact that the brain is made of billions of cells and different working units which analyze signals and biochemical events in different regions, and sometimes even at different times, and yet presents all this to our conscious awareness as a single, unified experience that does not appear to be composed of innumerable events.

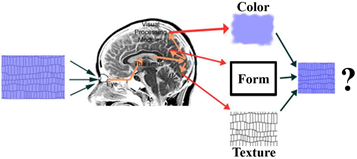

For example, although our brains process visual features such as colors, motion, and form in different specialized cortical areas using different neural pathways, we nevertheless experience these features united into a single meaningful whole. Modern brain scanning devices have shown us that what we perceive as the colors, shapes, movements, and orientations of individual objects are all qualities and properties that the brain analyzes separately in different areas. The brain does not compute them in sequential order but distributes information in parallel to different specialized areas where the characteristics of a single object are elaborated. Never in our brain does there appear an image or mapping of the world’s objects. There is no projection of a scene in the sense in which we tend to think of it. When we see a colored object, with a specific form, moving through space, its color, form, texture, and direction of movement are ‘disassembled’, in the sense that different brain cells are activated for these different properties, independently of the other properties and of the neighboring cells or brain areas. Not only that, but the result of this separate analysis of the object’s properties does not seem to be transmitted to some central receiving station that might unify them again into a single representation; instead, it circulates throughout the brain. And yet, it is everyone’s common experience that a unified vision somehow occurs in the end. We don’t see the redness of a tomato as a property separate from its form.

A visual binding problem emerges: if visual properties of things, such as shape, texture, and color, are distributed and analyzed separately throughout the brain regions, then where, how, and when do the perception of a shape and the perception of its color and texture become unified into a single experience of a colored object? The binding problem emerges because of this unexplained feature integration. While neurophysiological and neuropsychological findings show the existence of multiple brain areas that respond to primary features of objects and their spatial locations, scattering colors, movements, forms, and textures throughout the cortex, our phenomenal experience nevertheless coalesces into a perceptual unity of bound feature representations.

Fig. 10 Descartes’ brain model.

These findings of modern neuroscience make it clear that the Cartesian brain model, which René Descartes advanced to explain a supposed mind-body dualism, no longer holds. In this model, he advanced the hypothesis that all the stimuli from the outer world are somehow converted into impulses and codified in the brain, which conveys them all, as a single stream of information, towards the pineal gland, which he believed to be the center of perception and understanding, as well as the gateway to an immaterial mind or soul. We can nowadays certainly dismiss such a simplistic theory.

However, the binding of visual features is only one example of a much vaster and more complex ability of our conscious experience. Binding can also arise in a semantic context, in our language. For example, read this sentence:

“A beautiful sunset.”

The meaning of this sentence is immediate to everyone and there seems to be no problem at all. But is the meaning of this sentence simply the result of summing up the meanings of its components? How does the word “beautiful” relate to “sunset”? The words “beautiful” and “sunset”, separated from each other, give us a completely abstract (but at the same time very concrete!) perception which is very different from that of the sentence above. When these two semantic objects, “beautiful” and “sunset”, come together, a new qualitative subjective ‘perception of meaning’ comes into being.

Furthermore, things like ‘beauty’ and the light of the sun are intimately tied to subjective experience, and it is impossible to isolate them as single logical constructs devoid of any experiential perception and information. What this suggests is that, to understand the meaning of a sentence, which arises from a conscious binding of the words into a single semantic whole, subjective experience must somehow enter the scene. A theory based only on the logical and relational structure of the words with which we build sentences is insufficient; such structure is not the real ‘engine’ that produces this binding and the process of meaning.

Another interesting sentence to analyze, suggested by William Seager, is this [16]:

“Why did Tom betray Mary so suddenly?”

Is the meaning of this sentence clear and unique? It isn’t. Because, depending on where we place the emphasis, we can get three different meanings:

1) Why did Tom betray Mary so suddenly?

2) Why did Tom betray Mary so suddenly?

3) Why did Tom betray Mary so suddenly?

Here, we see that the meaning of a sentence isn’t simply in the words. We realize how the emphasis places the sentence in a specific context that can vary from time to time. We have exactly the same words and the same sentence, and nevertheless completely different semantic contents emerge. It should, therefore, come as no surprise that developing a theory of semantics that allows AI software to understand meaning has proven to be an extraordinarily difficult task. Such a theory cannot simply take single words and combine them; it must also consider the whole context, which means the whole world in the experiential dimension of a subject.

The sense of the whole sentence is also determined by the position and choice of a single word or a couple of words, which may lead to completely different, even opposite, meanings. A construction that exploits this semantic effect is called a ‘Winograd schema’: a pair of almost identical sentences, differing in only one or two words, in which an ambiguous pronoun must be resolved differently, so that the two sentences take on different meanings. One can infer the precise meaning only by knowing how the world is built and the overall context of the objects and concepts to which the sentences refer. An example of a Winograd schema was developed by the American economist Gary Smith [17]. Consider these three sentences.

“I can’t cut that tree down with that axe. It is too small.”

“I can’t cut that tree down with that axe. It is too thick.”

“I can’t cut that tree down with that axe. It is too late.”

In the first sentence, the pronoun “it” refers to the axe, while in the second sentence it refers to the tree. In the last sentence, it refers to neither, and instead indicates that the time for the task has passed. But how do we humans immediately know that? Because we know the meaning of the single words, the meaning of the objects of the world they indicate, and their relation to each other, and, most importantly, because all these objects and the world arise in us as a subjective experience. It is only when this happens that we can bind the words into an overall context with semantic content, that is, become aware of the meaning of the whole sentence. Machines fail miserably in this task, even with these simple and short sentences. There is nothing in them that understands, binds, apprehends, or experiences and that could, on that basis, construct any model of the world, let alone any significant semantic content.

Many have been tempted to believe that sooner or later we would discover a logical relation between words that would reveal how they coalesce into a clear and unique ‘element of meaning’. But, curiously, after decades of research in artificial intelligence, the philosophy of language, and models of the brain, it seems that behind the construction of a single meaning from such a simple and apparently trivial set of words stands a tremendously complex process of cognition. Every attempt to obtain the meaning of a sentence by treating it as the result of a bottom-up summation or aggregation of some elementary cognitive or logical ‘atoms’ has failed. Much more sophisticated deep learning neural networks have been developed in computer science, but we are still far from being able to build machines that understand the meaning that words and sentences convey. As already mentioned, translation software programs are nowadays common tools on every PC, but these are by no means able to replace human interpreters. It is easy to see where automatic translation tools or voice recognition systems fail most frequently: when they don’t recognize the meaning and try to guess.

[1] “The causal role of alpha oscillations in feature binding”, by Zhang Y. et al. – Proceedings of the National Academy of Sciences of the United States of America, 2019.